Why in the blue hell would someone do such a thing?

Scenes usually have multiple lights. Complex scenes have lights in the range of 400–800 or more. If each pixel tries to calculate the light even if it is not in the light range, then real-time rendering goes for a toss. Hence, an efficient method is needed to group the effective lights.

How I did it

- Broke the scene into clusters, created their AABBs.

- Tested the lights against the cluster’s AABB.

- Found the cluster for the pixel.

- Got the lights in the cluster and

Please note, this might hit the frame rate if additional optimizations such as culling, trimming, chopping etc. are missing. In my case, the frame rate dropped drastically, and it was expected as mine is a bare minimum renderer, immature as of now plus the light data was getting passed in each frame. The whole algorithm works in view space, although I wonder how it would behave if it’s moved to clip space as AABB creations might be simpler in clip space.

Broke the scene into clusters

Let’s go in the z direction first. Used, Tiago Sousa’s equation (in Medium how am I supposed to write mathematical formulas mathematically..!!) :

Z=NearZ * pow((FarZ/NearZ), (slice/numSlices)) .......... eq#1The frustum in Z direction was broken into slices which grew exponentially. The slices nearer to the camera were shorter compared to the far ones, this allowed more precision near the eye as no one cares what happens in Arctic.

The frustum was broken into Z slices and those slices were further broken in X, Y direction to create clusters.

Let’s assume a 3x3 grid in X, Y and 6 slices in Z. In order to get the X, Y coordinates on Z slices the screen coordinates projected onto the camera near plane were used. So (a, b, 0) in screen space gets converted to (a`, b`, camZnear) in view space. In Vulkan, the clip space Z range is [0,1], hence 0 instead of -1 as screen space Z. Each cluster’s corner coordinates in screen space were calculated using predetermined cluster width and height, 80 in my case.

Let’s collect the known entities (all of them, in view space).

- Camera position in view space becomes origin.

- The Z distance of near and far plane of each Z slice.

- The X, Y coordinates on the camera near plane, using these coordinates a ray is cast (starting from origin) which will hit the Z planes (near and far) of the Z slice.

The triangles OST and OPQ are congruent, hence we get d and coordinates of Q.

d=c * (a/b)

Similarly, we determine the other 3 points on the Z near slice, using the above equation. A total of 8 points on near and far slice gives us a trapezoid. In my case, the midpoint of the min points on both slices were used to create the min point for the AABB. Similarly, the max point for the AABB was determined. It gave non overlapping AABBs, which were easier to mentally visualize while debugging.

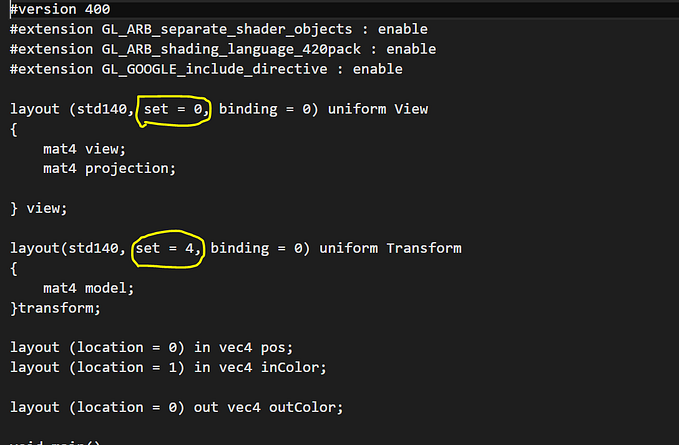

The above theory needs to be implemented on the machine. The machine in my case, was GPU because it’s more suited for this operation with its multiple cores and my LinkedIn profile has GP/GPU :). The Vulkan compute with dispatch size equal to number of clusters, 16*9*24 in my case, with 1 thread (local size 1,1,1) representing a single cluster. The two major components of this shader were conversion of screen position to view position and finding the AABBs as explained above. The space conversion used the inverse of the projection matrix.

//Convert to NDC

vec2 texCoord = screen.xy / vec2(scene.screenWidth, scene.screenHeight);

//Convert to clipSpace

vec4 clip = vec4(vec2(texCoord.x, 1.0 - texCoord.y)* 2.0–1.0, screen.z, screen.w);

//View space transform

vec4 view = inverse(scene.projection) * clip;

//Perspective projection

view = view / view.w;Assigned lights to clusters

The basic AABB collision detection, on a compute shader, gave lights per cluster. A work group represented a cluster and each invocation in the group represented a single light. The light’s AABB or bounding sphere was tested against the cluster’s AABB.

shared int lightList[MAX_LIGHTS];The threads in a workgroup shared the above array to update the collision results, without overwriting each other’s updates as every index belonged to a thread/light. The collisions are well explained here 3D collision detection — Game development | MDN (mozilla.org). Post collision detection, the usage of barriers, assisted to collate the lights present in the cluster. The max lights per cluster had been set to 7.

Found the cluster for the pixel

The fragment shader received the light (color, position, etc.) and cluster data (AABB, light indices in cluster, light count). In order to find the lights affecting the fragment, it’s respective cluster was determined. The major components regarding light selection were conversion of screen coordinates to view space and finding the cluster of the fragment. The former is same as explained earlier. In order to find the cluster, the Z index was calculated using the eq#1 mentioned at the start, here slice being the unknown variable.

int zSlice = int(numZSlices * log(abs(viewSpacePos.z/camZNear))/log(camZFar/camZNear));Care is required with X and Y cluster indices as the X,Y origin (top left corner) for each slice is different because of the trapezoid shape.

uint topLeftClusterIndex = zSlice * numClustersX * numClustersY;The extreme X,Y values within the Z slice were calculated by using the indices of the corner clusters. The cluster width and height were calculated as below.

float clusterWidth = (xmax-xmin)/numClustersX;

float clusterHeight = (ymax-ymin)/numClustersY;The fragment’s X,Y cluster index was calculated as below. The formula is simple, numDivisions being the unknown.

startPos + divisionValue * numDivisions = viewPos.(x or y)

int clusterXIndex = int((viewSpacePos.x - topLeftClusterPos.x)/clusterWidth);

int clusterYIndex = int(abs(viewSpacePos.y - topLeftClusterPos.y)/clusterHeight);The last stage transformed the 3d index of the cluster to 1d index to get the lights of the cluster and use it for light calculations.

There is a lot of scope for optimizations, hopefully the scope gets explored in future.

Code:

quad-bit/Loops2: Vulkan renderer with rendergraph (github.com)

References:

A Primer On Efficient Rendering Algorithms & Clustered Shading. (aortiz.me)

https://www.youtube.com/watch?v=uEtI7JRBVXk&pp=ygURY2x1c3RlcmVkIHNoYWRpbmc%3D